Synchronize mobile app events into BigQuery

Firestore is perfect for your mobile and web apps, but to analyze and audit small, frequently pushed pieces of data that your client applications never consume, you need the power of BigQuery. For instance, say you want to count how many times a customer taps a button in your mobile app, or see which features a user relies on, so you can provide better customer support by understanding their actions and engagement.

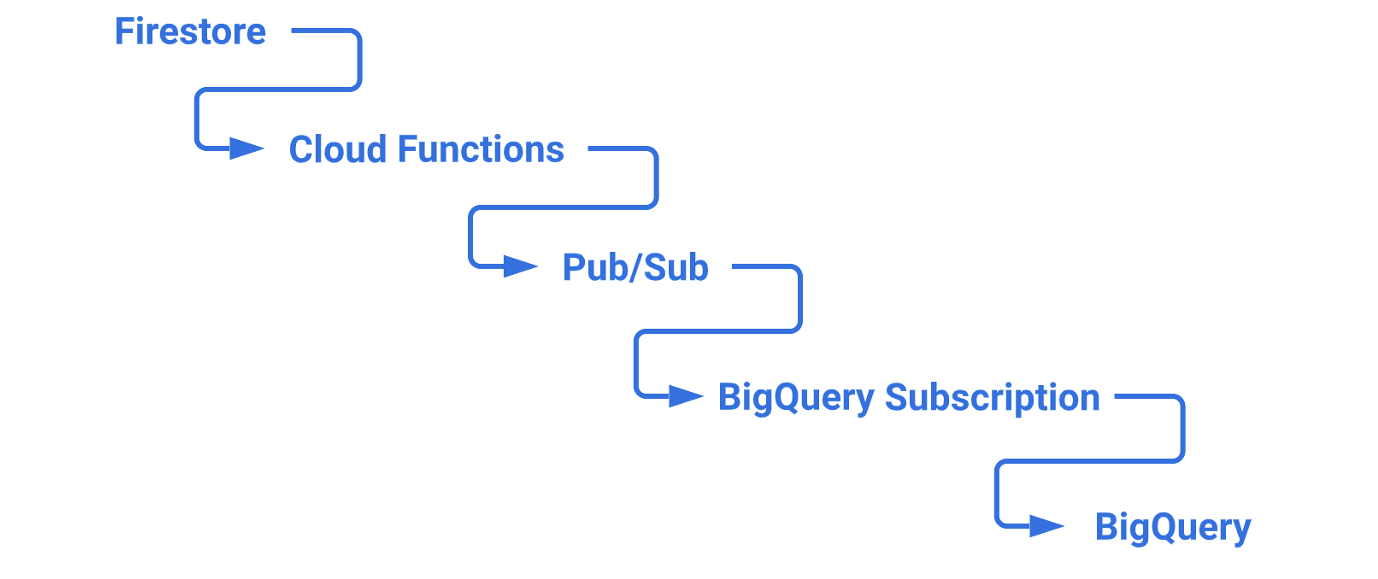

In this blog post, we will walk through synchronizing Firestore data into BigQuery. We will implement a Cloud Function that is triggered by document create events and publishes the document data to a Pub/Sub topic. Pub/Sub leaves room for future expansion, and a recently added Pub/Sub feature called BigQuery subscriptions makes it simple to get the data into BigQuery.

Here are the Google Cloud features we will be covering and the process flow:

Firestore ➔ Cloud Functions ➔ Pub/Sub ➔ BigQuery Subscriptions ➔ BigQuery

Part One: Firestore and Cloud Function

Let’s break this post into two parts. First, we will verify that Firestore document create events trigger the Cloud Function and that the function receives the document data. Second, we will set up Pub/Sub and BigQuery and change the Cloud Function to publish the data.

Enable and set up Firestore

In your Google Cloud Platform (GCP) project, enable Firestore, choose Native mode, select your preferred region, and create a collection named messages.

For our prototyping here, set the Security Rules to the following:


Note: We are skipping proper Firestore Security Rules for this article. This is not something you should do in your production systems! See the security rules documentation for how to set up rules for your project.

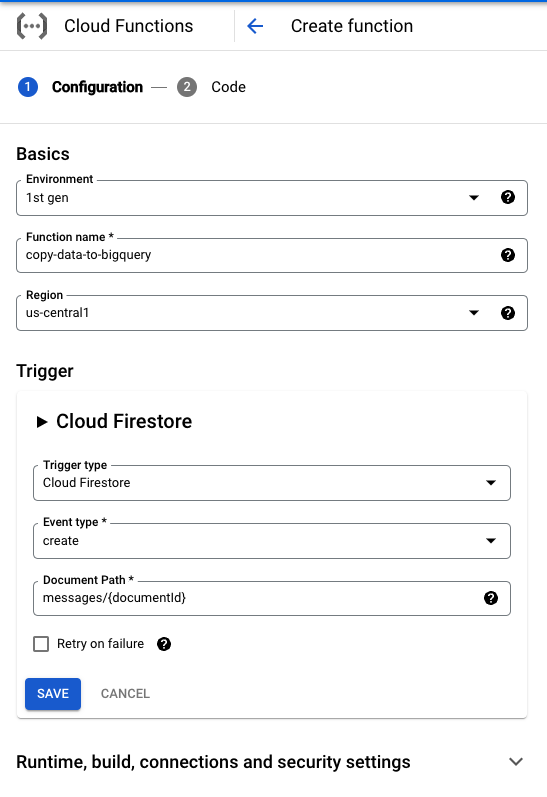

Create a Cloud Function with the basics

In GCP, enable Cloud Functions and create a function with the following properties:

- Environment: 1st gen

- Name: copy-data-to-bigquery

- Region: same region used for Firestore

- Trigger type: Cloud Firestore

- Event type: create

- Document Path: messages/{documentId}
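The Document Path `messages/{documentId}` fires for any document created directly in the `messages` collection, with `{documentId}` standing for the new document's ID. In a 1st gen function, the document's full resource name is available in the event payload as `event.value.name`, in the form `projects/PROJECT_ID/databases/(default)/documents/messages/DOCUMENT_ID`. Assuming that format, a hypothetical helper like `parseDocumentName` below is one way to recover the collection and document ID; `my-project` is a placeholder project ID.

```javascript
// Hypothetical helper: split a Firestore document resource name such as
// "projects/my-project/databases/(default)/documents/messages/test"
// into the collection and the value bound to the {documentId} wildcard.
function parseDocumentName(name) {
  const marker = '/documents/';
  const index = name.indexOf(marker);
  if (index === -1) {
    throw new Error(`Not a Firestore document name: ${name}`);
  }
  // Path relative to the database root, e.g. "messages/test".
  const segments = name.slice(index + marker.length).split('/');
  // messages/{documentId} only matches top-level documents: collection/doc.
  if (segments.length !== 2) {
    throw new Error(`Expected collection/documentId, got: ${segments.join('/')}`);
  }
  const [collection, documentId] = segments;
  return { collection, documentId };
}

// "my-project" is a placeholder project ID.
const parsed = parseDocumentName(
  'projects/my-project/databases/(default)/documents/messages/test'
);
console.log(parsed); // { collection: 'messages', documentId: 'test' }
```

Documents in subcollections (for example `messages/test/replies/r1`) would not match this trigger, which is why the helper rejects paths with more than two segments.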

Note: We are using 1st gen Cloud Functions because 2nd gen uses Eventarc, and, as we reported earlier in a Medium post, Eventarc doesn’t work well with Firestore events.

For the Cloud Function code, choose the following settings:

- Runtime: Node.js 16

- Entry point: publishToBigQuery

- Copy the following code into index.js:


Copy the following code into package.json:


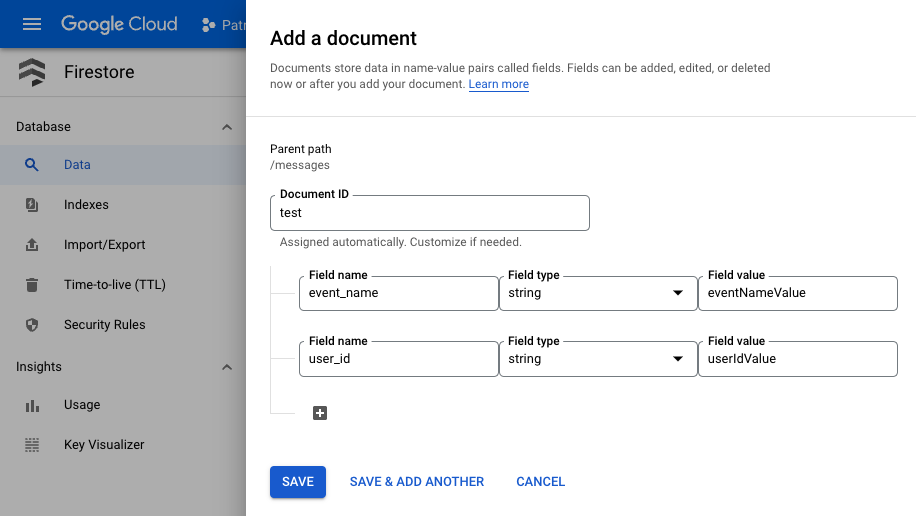

Create some data in Firestore

In Firestore, select the messages collection and add a document with the following properties:

- Document ID: test

- Field Name: event_name, Field value: eventNameValue

- Field Name: user_id, Field value: userIdValue
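In the event payload the function receives, each field of this document is wrapped in a type descriptor rather than stored as a plain value; `event_name`, for instance, appears as `{"stringValue": "eventNameValue"}`. If you later want plain JSON instead of the raw event, a small converter can unwrap the values. This is a sketch with hypothetical helper names (`fromFirestoreValue`, `fromFirestoreFields`) that handles only common types; bytes, references and geo points are left out.

```javascript
// Hypothetical helpers: convert Firestore's typed JSON values (as found in
// event.value.fields) into plain JavaScript values. Only common types handled.
function fromFirestoreValue(value) {
  if ('stringValue' in value) return value.stringValue;
  if ('booleanValue' in value) return value.booleanValue;
  // integerValue arrives as a string to preserve 64-bit precision;
  // Number() loses precision above 2^53, which is acceptable for a sketch.
  if ('integerValue' in value) return Number(value.integerValue);
  if ('doubleValue' in value) return value.doubleValue;
  if ('nullValue' in value) return null;
  if ('timestampValue' in value) return value.timestampValue; // RFC 3339 string
  if ('arrayValue' in value) {
    return (value.arrayValue.values || []).map(fromFirestoreValue);
  }
  if ('mapValue' in value) return fromFirestoreFields(value.mapValue.fields || {});
  throw new Error(`Unsupported Firestore value: ${JSON.stringify(value)}`);
}

function fromFirestoreFields(fields) {
  const result = {};
  for (const [key, value] of Object.entries(fields)) {
    result[key] = fromFirestoreValue(value);
  }
  return result;
}

// The fields of the "test" document above, as they would appear in the event.
const fields = {
  event_name: { stringValue: 'eventNameValue' },
  user_id: { stringValue: 'userIdValue' },
};
console.log(fromFirestoreFields(fields));
// { event_name: 'eventNameValue', user_id: 'userIdValue' }
```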

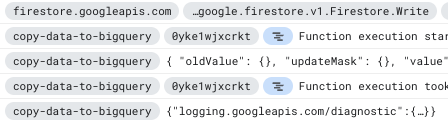

See output from Cloud Function in Logs Explorer

In GCP, go to Logging and click the Stream logs button.

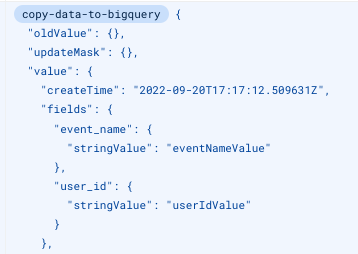

After a short while, you should see the Firestore write event come through, along with the event JSON output from the Cloud Function, similar to the following:

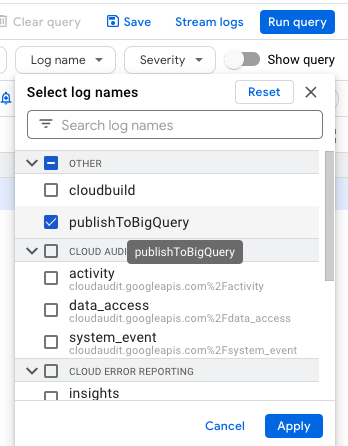

To filter out noise and see only the Cloud Function’s logging output, click the Log Name button, check publishToBigQuery, and click Apply.

You can also use the Resource button to filter the logs if you prefer, but writing to our own log makes the function’s entries easy to isolate.

When you expand the log entry from the Cloud Function, you should see the following:

Part Two: Publishing data to BigQuery

Now that we have the plumbing in place to trigger the Cloud Function from Firestore, we will create a Pub/Sub topic, make a BigQuery Subscription and modify the Cloud Function to publish to the topic.

Enable and set up BigQuery

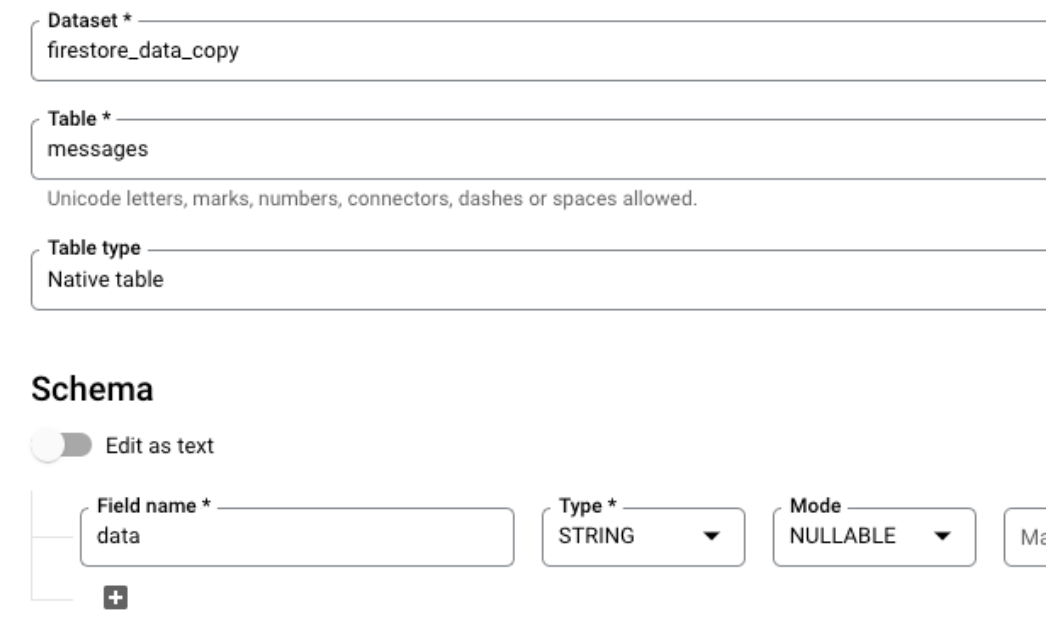

In GCP, go to BigQuery and create a Dataset with the following properties:

- Dataset ID: firestore_data_copy

- Data location: Same region used for Cloud Functions and Firestore

Then create a table: select the dataset, choose Create table from its three-dot menu, and use the following properties:

- Table: messages

- Schema: Field Name: data, Type: STRING
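These two steps can also be scripted with the `@google-cloud/bigquery` Node.js client. The hypothetical `createMessagesTable` helper below is a sketch that takes a `BigQuery` client as a parameter; `location` is a placeholder for whichever region you chose for Firestore and Cloud Functions.

```javascript
// Sketch: create the firestore_data_copy dataset and its messages table.
// Pass `new BigQuery()` from @google-cloud/bigquery; `location` should match
// the region used for Firestore and Cloud Functions.
async function createMessagesTable(bigquery, location) {
  const [dataset] = await bigquery.createDataset('firestore_data_copy', { location });
  // A single STRING column named "data": without a topic schema, a BigQuery
  // subscription writes each Pub/Sub message's data into this column.
  const [table] = await dataset.createTable('messages', {
    schema: [{ name: 'data', type: 'STRING' }],
  });
  return table;
}
```

Calling it once with a real client, for example `createMessagesTable(new BigQuery(), 'us-central1')`, should be equivalent to the console steps above.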

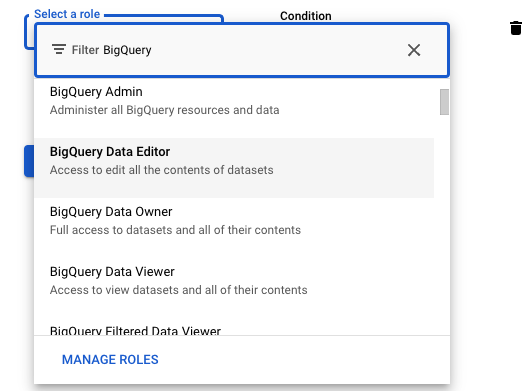

Add BigQuery Data Editor role to Pub/Sub service account

For a BigQuery subscription to write the messages published to a Pub/Sub topic into BigQuery, the Pub/Sub service account needs BigQuery permissions. To grant them, perform the following steps:

- In GCP, go to IAM & Admin.

- Select the Include Google-provided role grants checkbox at the top right, above the table.

- Click the pencil icon for the Cloud Pub/Sub Service Account.

- Click the ADD ANOTHER ROLE button.

- Type BigQuery in the Filter field.

- Choose BigQuery Data Editor from the list.

- Click Save.

Create Pub/Sub topic

In GCP, go to Pub/Sub and create a topic with the following properties:

- Topic ID: firestore-to-BQ-data-field

- Leave the defaults and click CREATE TOPIC.

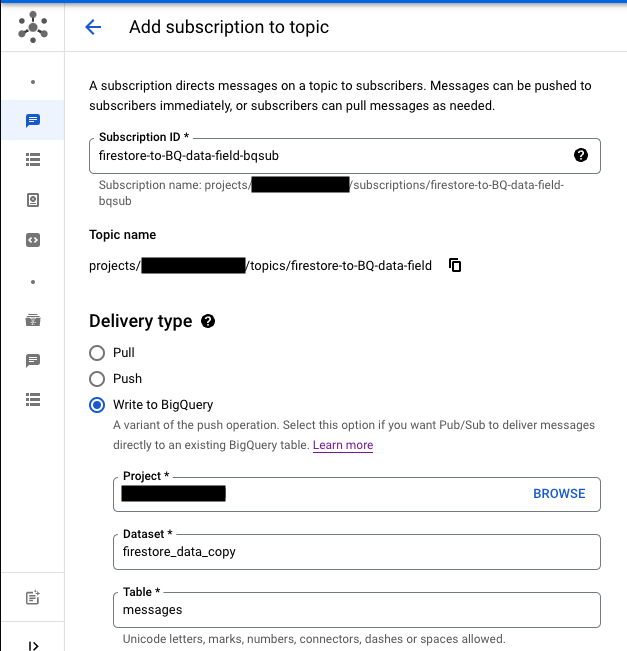

Create BigQuery Subscription

After the topic is created, click the Export to BigQuery button, keep the defaults, click CONTINUE, and use the following properties:

- Subscription ID: firestore-to-BQ-data-field-bqsub

- Dataset: firestore_data_copy

- Table: messages

Leave everything else as defaults.
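If you would rather script the topic and subscription than click through the console, the `@google-cloud/pubsub` Node.js client can create both. This is a sketch, assuming the BigQuery Data Editor grant above is already in place and that `projectId` is your project's ID; `createBigQuerySubscription` is a hypothetical helper that takes a `PubSub` client as a parameter. The `bigqueryConfig.table` value uses the `PROJECT.DATASET.TABLE` form.

```javascript
// Sketch: create the topic and its BigQuery subscription.
// Pass `new PubSub()` from @google-cloud/pubsub; `projectId` is your project.
const TOPIC_ID = 'firestore-to-BQ-data-field';
const SUBSCRIPTION_ID = 'firestore-to-BQ-data-field-bqsub';

async function createBigQuerySubscription(pubsub, projectId) {
  const [topic] = await pubsub.createTopic(TOPIC_ID);
  // Only the target table is set; everything else keeps its default,
  // so no topic schema is used and message data lands in the "data" column.
  const [subscription] = await topic.createSubscription(SUBSCRIPTION_ID, {
    bigqueryConfig: { table: `${projectId}.firestore_data_copy.messages` },
  });
  return subscription;
}
```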

Update the Cloud Function code to write to Pub/Sub topic

In GCP, go to Cloud Functions, click copy-data-to-bigquery in the list, and click the EDIT toolbar button.

Click Next and copy the following code into index.js:


Copy the following code into package.json:


Click DEPLOY.

Create document in Firestore

Now it’s time to test the rest of the plumbing by creating a document in Firestore and watching its data arrive in BigQuery.

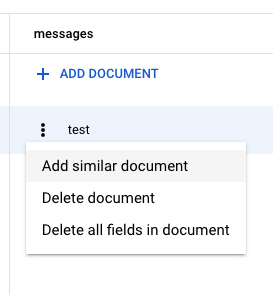

In GCP, go to Firestore, click the three-dot menu next to the test document in the messages collection and choose Add similar document.

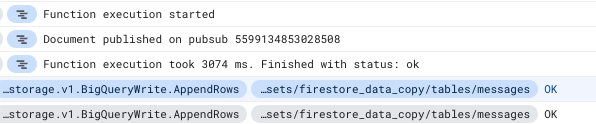

Click Save, then in GCP, go to Logging > Logs Explorer and wait until you see log entries from the Cloud Function as well as BigQueryWrite.AppendRows entries similar to the following:

See the results in BigQuery

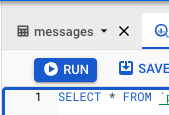

Once the log entries appear, go to BigQuery and do the following:

- Select the messages table in the left navigation tree.

- Click the Query drop-down button, and choose In new tab to add a new editor tab.

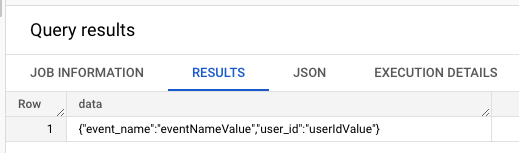

- In the tab, type * between the words SELECT and FROM as shown below and click RUN.

In the Query results section that appears, you should see the data you entered into Firestore.
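The same check can be run from code. As a sketch, assuming the `data` column holds the serialized Firestore event with the document's fields under `value.fields` (verify this against your own rows), the query below uses BigQuery's `JSON_VALUE` function to pull `event_name` and `user_id` out of the stored JSON string. `buildMessagesQuery` and `readMessages` are hypothetical names, and the helper expects a `BigQuery` client from `@google-cloud/bigquery`.

```javascript
// Sketch: query the messages table from Node.js and extract individual
// fields from the JSON string in the "data" column with JSON_VALUE.
// Assumes each row's data is a serialized Firestore event whose document
// fields sit under value.fields.
function buildMessagesQuery(projectId) {
  return `
    SELECT
      JSON_VALUE(data, '$.value.fields.event_name.stringValue') AS event_name,
      JSON_VALUE(data, '$.value.fields.user_id.stringValue') AS user_id
    FROM \`${projectId}.firestore_data_copy.messages\``;
}

async function readMessages(bigquery, projectId) {
  // query() resolves to [rows]; each row is a plain object keyed by column.
  const [rows] = await bigquery.query({ query: buildMessagesQuery(projectId) });
  return rows;
}
```

Because the whole event is stored as a string, adding a field to your Firestore documents requires no table change; you only add another `JSON_VALUE` expression to the query.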

Summary and next steps

While it might seem silly to store the raw JSON data from the Firestore event in a single data column in BigQuery, this has the added benefit of not requiring schema updates later as the data you consume evolves. Lee Doolan’s Medium post covers the pros and cons of using topic schemas, so check that out if you want to learn more.

However, we’ve written another blog post that walks through how to populate specific table columns based on the JSON data.

Thanks for reading, and I hope this post has helped you. Keep us in mind for all your mobile application needs.

About Atomic Robot

Atomic Robot brings together the best developers and creators to deliver custom-crafted mobile solutions for iOS, Android, and emerging technologies.

Please reach out to us through https://atomicrobot.com/contact/.